Large-scale Broadcast Event Usability and Accessibility

11/28/2018 1:13:15 PM

By Kris Schulze, MNIT Experience IT program manager

This past year, Experience IT and the Office of Accessibility at Minnesota IT Services (MNIT) helped test options to conduct large-scale broadcast events for 1,000 to 10,000 attendees. Our question: How do we best host a broadcast event for all 2,300 MNIT staff while planning for inclusion from the start?

This issue arose in part because we discovered licensing limits with our default web presentation tools, Cisco WebEx and Microsoft Skype for Business. We needed a tool that would economically reach 2,300 attendees, and would allow captions, live video, PowerPoint content, and live Question and Answer (Q&A). We wanted an experience for ALL our end users that was easy to use and provided decent video and audio quality.

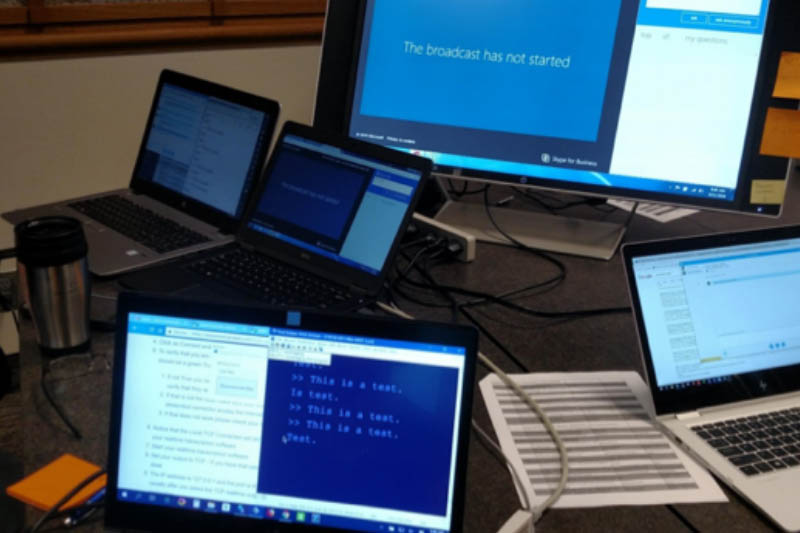

During this time, Microsoft’s Skype Meeting Broadcast entered the picture as an option. We held multiple live test events to learn the technology, determine the best way to provide captioning, validate network and firewall settings at multiple locations around the state, and test for accessibility and usability.

Our test audiences started off small and worked up to larger numbers of people joining the test broadcasts. The initial accessibility and usability testing helped our team work out a repeatable process for hosting accessible Skype Broadcast events. With each successive test, we enlisted more and more MNIT staff to join the broadcasts. During each test event, we crowdsourced feedback from attendees to help us identify and troubleshoot issues and improve our processes before the next test. One of the trickiest issues was working out how to minimize lag time between the live broadcast and captions that were displayed in a separate caption window (find out why this was our preferred option below). End user feedback was critical in making sure we could troubleshoot any production issues and provide better instructions for users so they would have a positive experience.

Discoveries from this feedback were exciting, especially because many of the respondents were not regular users of captions or assistive technology. For example, we had just over a 50% response rate for one of our larger tests of 360 people. Of those:

Employee comments included:

“Easy to use and the ability for captions to go back and read if you missed something or didn't hear it.”

“The closed captions in a separate window were nice. I liked being able to customize the font size/color/selection as well as the background color. It was also nice to be able to see the captions at the same time as the presentation.”

“Pacing of captioning was much improved over the first Skype Broadcast test in August. It was at most one sentence ahead of the speaker.”

“Glad to see the closed caption is now synced up vs. other tests we have done. I like the fact you can move that separate browser window anywhere you want. I would recommend that information is provided in a slide before each Skype Broadcast as a standard or add a link to the email invite for any meeting in case someone joins the meeting late.”

During earlier tests we also received great feedback about general usability and captions. User feedback helped us create clearer instructions for using Skype Broadcast and captions. We included these in the meeting invitation and on the information page on the employee intranet. We also developed the practice of using the first several minutes of the broadcast to provide instruction on how to navigate the Skype Broadcast attendee panel, open the caption window, and submit questions in the Q&A panel. The feedback also highlighted how difficult it is to deliver real-time captioning with minimal lead or lag time, and it revealed frustrations:

“The captions are still a few seconds ahead of the audio and video feeds, which makes it really annoying to read the captions. Also, it's impossible to scroll back up in the captions window, it keeps jumping down to the bottom. Even after the session had ended I couldn't scroll back up to see a sentence I missed, which was very annoying.”

“I would rather see the captions overlaying the video. Opening the captions in Chrome on Android closes the window with the video. You cannot use them if you want to see the event.”

“The closed captioning did work from the start in IE but it was frustrating as I tried to scroll up to read what I missed and it would keep dropping down as each new line was added before I could even find where I left off. I fixed that by eventually seeing the checkbox to stop the auto-scroll but it was yet another frustrating thing.”

Our primary takeaway was that people who may not have used captions discovered they were really helpful in case they missed a word or wanted to back up and re-read something. This reinforces the belief that if you design and plan for accessibility from the start, far more people than those who use assistive technology benefit from it. The second takeaway – from resolving the issues such as those listed above – is that if you provide basic instruction on how assistive technology works and encourage people to try it out, they adopt it more readily. This familiarity builds an awareness of the importance of planning for accessibility and how it creates positive user experiences for everyone as they go about their daily work. It doesn’t matter if they’re planning for an online meeting, a website, or an email. The key is to think about accessibility and usability for all up front.

Did you know that providing live, real-time captioning for a web broadcast event can be kind of tricky? You may have come across promotions for Microsoft’s auto-captioning service as part of their cloud offerings. However, it is not yet available in our Office 365 Government Community Cloud, and we required a more reliable accuracy rate than it could deliver. We found our best option was to use live real-time captioning services, often termed Communication Access Real-time Translation (CART). If you would like to learn more about CART, visit the captioning information on our multimedia accessibility webpage (/mnit/about-mnit/accessibility/multimedia.jsp). We also learned that it wasn’t a simple matter to provide access to those captions. Microsoft’s Skype Meeting Broadcast did provide a “cc” button, but only for the automated captions. Providing captions any other way required a separate link.

And, did you know there is about a 30-second lag time for broadcasts? That meant if our caption writer was in the room transcribing in real time with the presenter, our attendees would read the captions 30 seconds before they heard the words on the broadcast. We found it worked best for the caption writer to caption while listening to the delayed broadcast rather than the live event. This minimized any lag or lead time and synced the captions more closely with what viewers were experiencing.
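The timing trade-off described above can be sketched with a little arithmetic. This is only an illustration, not a description of how Skype Meeting Broadcast or the caption service works internally: the function name, the `transcription_latency_s` parameter, and the assumption that captions reach the separate caption window with no additional delay are all hypothetical. Only the roughly 30-second broadcast delay comes from our testing.

```python
# Hypothetical timing model. Only the ~30-second broadcast delay is from the
# article; everything else is an assumption made for illustration.
BROADCAST_DELAY_S = 30.0


def caption_offset(captioner_source: str, transcription_latency_s: float = 0.0) -> float:
    """Seconds by which captions lead (+) or trail (-) the broadcast audio.

    captioner_source: "live_room" if the caption writer listens in the room,
                      "broadcast" if they listen to the delayed broadcast feed.
    transcription_latency_s: assumed time the caption writer needs to type
                             what they hear (hypothetical parameter).
    """
    if captioner_source == "live_room":
        heard_at = 0.0                 # hears the words as they are spoken
    elif captioner_source == "broadcast":
        heard_at = BROADCAST_DELAY_S   # hears the words when viewers do
    else:
        raise ValueError(f"unknown captioner source: {captioner_source!r}")

    # Assumption: captions appear in the caption window as soon as they are typed.
    caption_shown_at = heard_at + transcription_latency_s
    viewer_hears_at = BROADCAST_DELAY_S
    return viewer_hears_at - caption_shown_at


if __name__ == "__main__":
    for source in ("live_room", "broadcast"):
        offset = caption_offset(source, transcription_latency_s=2.0)
        direction = "ahead of" if offset > 0 else "behind"
        print(f"{source}: captions are {abs(offset):.0f} s {direction} the audio")
```

Under these assumptions, captioning from the room puts the text far ahead of the audio, while captioning from the delayed feed leaves only the caption writer's own typing delay.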

The separate caption window allowed users to adjust text size, font, and colors. Users were also able to resize and move the caption window, such as below or to the side of the presentation. So, although we would have loved for the captions to be built within the Broadcast panel, the customizable features were worth the separate window.

An alternative for the lead/lag-time issue would be to invest in video mixers or use free, open-source software such as Open Broadcaster Software (OBS), which would allow adding captions as an overlay, similar to TV captions. For the purposes of our testing, however, we used only the features that were available through Skype Meeting Broadcast.

Would you like to learn more about the accessibility work being done by Minnesota IT Services and the State of Minnesota? Once a month we will bring you more tips, articles, and ways to learn more about digital accessibility.
